Inspired by the SpaceX ISS Docking Simulator, I built an approximate replica of the SpaceX Crew Dragon (Dragon 2) vehicle that you pilot manually toward the docking station of the International Space Station (ISS).

In this VR simulation, you can:

- Pilot the Crew Dragon vehicle toward the docking station.

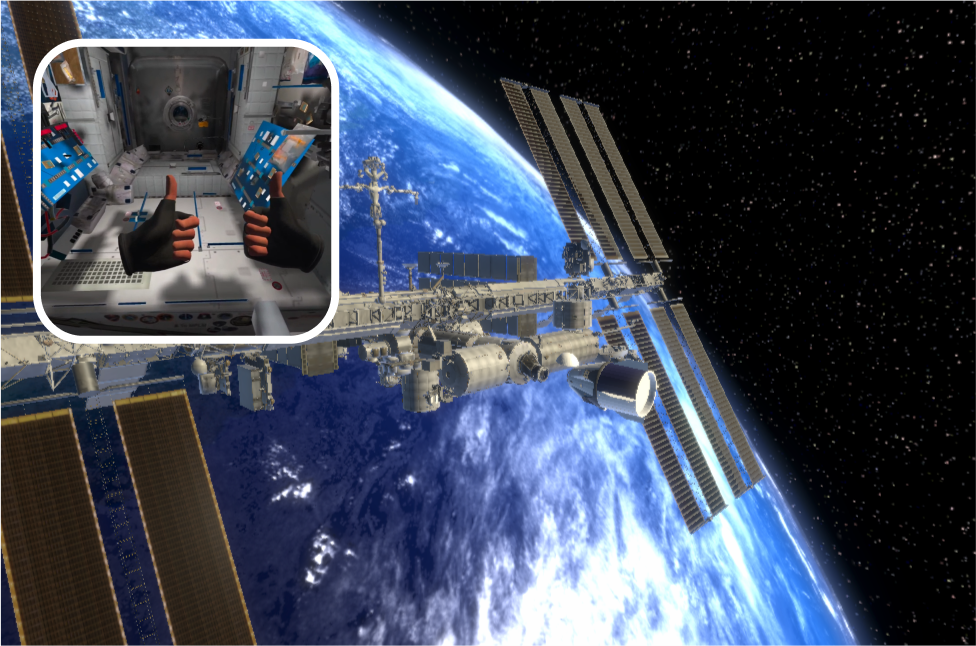

- Explore the ISS.

- Use your virtual

This simulation was built as an educational tool that lets users experience what it is like to pilot a spacecraft.

Pilot Experience

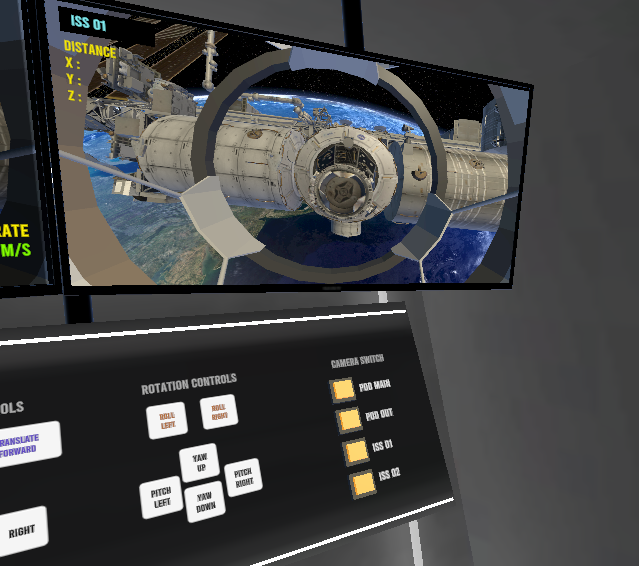

I replaced the Crew Dragon touch screens with buttons, which are easier to interact with in VR. Using the control panel, the pilot can adjust the following:

- Position control along two axes

- Forward movement speed

- Rotational control about three axes (yaw, pitch, and roll)
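The article does not show the vehicle-movement code, and the real components are C# scripts in Unity. As a language-neutral illustration only, the Python sketch below shows one way the control-panel inputs listed above could drive the vehicle each frame. All class, field, and function names are hypothetical, the integration is plain explicit Euler, and translations are kept in the docking-axis frame rather than rotated by the vehicle's attitude, purely for simplicity.

```python
from dataclasses import dataclass


def wrap_deg(angle: float) -> float:
    """Wrap an angle in degrees into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


@dataclass
class ControlInput:
    """Hypothetical per-frame snapshot of the control-panel inputs."""
    lateral_speed: float = 0.0   # m/s, first axis of the two-axis position control
    vertical_speed: float = 0.0  # m/s, second axis of the two-axis position control
    forward_speed: float = 0.0   # m/s, closing speed toward the docking station
    yaw_rate: float = 0.0        # deg/s
    pitch_rate: float = 0.0      # deg/s
    roll_rate: float = 0.0       # deg/s


@dataclass
class VehicleState:
    """Hypothetical vehicle pose, expressed relative to the ISS docking axis."""
    lateral: float = 0.0   # m, sideways offset from the docking axis
    vertical: float = 0.0  # m, up/down offset from the docking axis
    distance: float = 0.0  # m, remaining distance to the docking station
    yaw: float = 0.0       # deg, relative to the ISS orientation
    pitch: float = 0.0     # deg
    roll: float = 0.0      # deg


def step(state: VehicleState, ctrl: ControlInput, dt: float) -> VehicleState:
    """Advance the vehicle by one frame using explicit Euler integration.

    Simplification (assumption): translations are applied in the docking-axis
    frame and are not rotated by the vehicle's current attitude.
    """
    return VehicleState(
        lateral=state.lateral + ctrl.lateral_speed * dt,
        vertical=state.vertical + ctrl.vertical_speed * dt,
        distance=state.distance - ctrl.forward_speed * dt,
        yaw=wrap_deg(state.yaw + ctrl.yaw_rate * dt),
        pitch=wrap_deg(state.pitch + ctrl.pitch_rate * dt),
        roll=wrap_deg(state.roll + ctrl.roll_rate * dt),
    )


# Usage: 10 frames of 0.1 s each, i.e. one second of simulated time.
state = VehicleState(distance=10.0)
ctrl = ControlInput(forward_speed=0.5, yaw_rate=2.0)
for _ in range(10):
    state = step(state, ctrl, 0.1)
# state.distance is now about 9.5 m and state.yaw about 2.0 degrees
```

Returning a new state each frame, instead of mutating one in place, keeps the update easy to test in isolation; a Unity component would more likely write the result straight into the vehicle's transform.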

As with the real Crew Dragon, the simulation requires the vehicle to approach with the right speed and orientation to connect to the docking station. To help the pilot achieve this, the current speed and rotational speed are displayed relative to the orientation of the ISS.

When all readings are within the green zone, docking succeeds and the user is taken inside the ISS, where they can explore.
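A minimal sketch of the green-zone check, again in Python rather than the project's C#. The reading names and tolerance values are placeholders chosen for illustration; they are not the simulator's actual thresholds or official Crew Dragon limits. The sketch assumes each reading is already expressed relative to the ISS, as the display described above does, and that the check runs when the vehicle reaches the docking station.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Limit:
    """Hypothetical green-zone bound: a reading passes if |value| <= max_abs."""
    name: str
    max_abs: float


# Placeholder tolerances for illustration only (not the simulator's real values).
GREEN_ZONE: Tuple[Limit, ...] = (
    Limit("closing_speed", 0.2),    # m/s
    Limit("lateral_offset", 0.1),   # m
    Limit("vertical_offset", 0.1),  # m
    Limit("yaw_error", 0.5),        # deg, relative to the ISS orientation
    Limit("pitch_error", 0.5),      # deg
    Limit("roll_error", 0.5),       # deg
    Limit("yaw_rate", 0.1),         # deg/s
    Limit("pitch_rate", 0.1),       # deg/s
    Limit("roll_rate", 0.1),        # deg/s
)


def out_of_zone(readings: Dict[str, float],
                limits: Tuple[Limit, ...] = GREEN_ZONE) -> List[str]:
    """Return the names of readings outside the green zone.

    A reading missing from `readings` is treated as out of zone.
    """
    failing = []
    for limit in limits:
        value = readings.get(limit.name)
        if value is None or abs(value) > limit.max_abs:
            failing.append(limit.name)
    return failing


def can_dock(readings: Dict[str, float],
             limits: Tuple[Limit, ...] = GREEN_ZONE) -> bool:
    """Docking succeeds only when every reading is inside the green zone."""
    return not out_of_zone(readings, limits)
```

Returning the list of failing readings, rather than only a boolean, would let a display highlight exactly which numbers are still outside the green zone; whether the simulator does this is not stated in the article.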

I also added a camera station where the user can check the vehicle's position and its distance from the ISS.

Here is a video demonstration of the docking. Docking is a slow process that requires precision, so I recommend watching the video at a higher playback speed.

Exploring Inside and Outside the ISS

My main goal for this project was to build the docking experience. I also built a simple exploration scenario in which the user can fly around inside and outside the ISS.

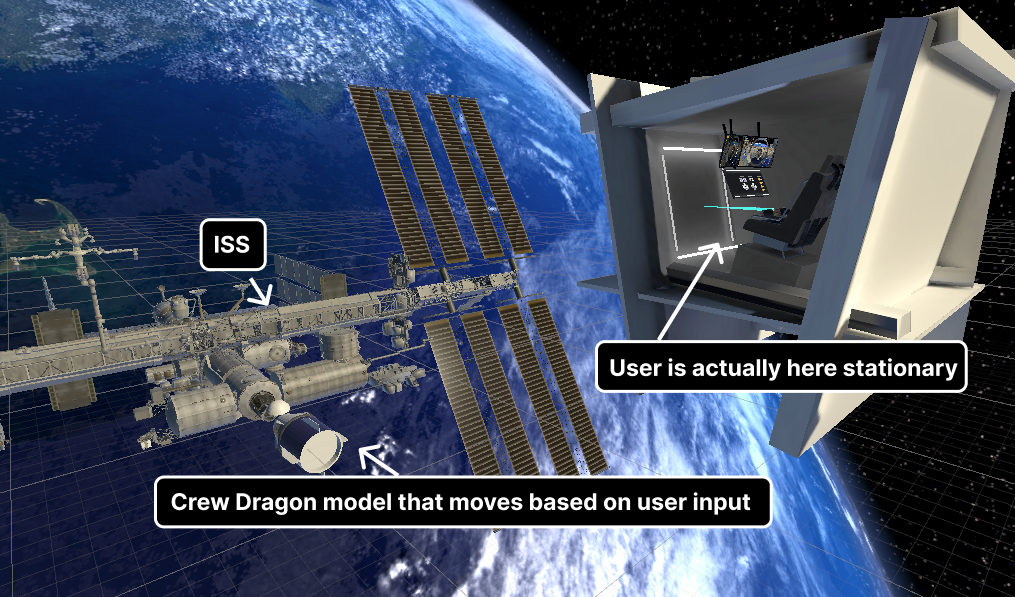

In this scene, the user appears to be inside the vehicle, but they are actually placed in a closed, stationary pod. The cameras are attached to the vehicle model, which moves according to the input values. This setup gives the user enough room to move their controllers without being confined to a moving object.

I built the experience in Unity 2023 with the XR Interaction Toolkit and wrote custom C# components for the vehicle movement. Apart from the VR camera, the scene uses only two cameras, which render the in-vehicle screens; their rendering rate was reduced to improve performance. All lights in the scene are baked (precomputed) to reduce the batch count.
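One common way to lower a secondary camera's rendering rate is to render it only on some frames; in Unity this is typically done by disabling the camera component and calling `Camera.Render()` manually on the chosen frames. The article does not say which approach was used, so the Python sketch below only illustrates the scheduling logic. `ThrottledCamera`, `run_frames`, and the camera names are hypothetical, and the `render` callback stands in for the engine's render call.

```python
from typing import Callable, List


class ThrottledCamera:
    """Hypothetical secondary camera that renders only every `every_n` frames.

    `phase` staggers cameras so that two throttled cameras do not render
    on the same frame, spreading their cost across frames.
    """

    def __init__(self, name: str, every_n: int, phase: int,
                 render: Callable[[str], None]) -> None:
        if every_n < 1:
            raise ValueError("every_n must be >= 1")
        self.name = name
        self.every_n = every_n
        self.phase = phase % every_n
        self.render = render

    def on_frame(self, frame_index: int) -> bool:
        """Render if this frame belongs to this camera; return whether it rendered."""
        if frame_index % self.every_n == self.phase:
            self.render(self.name)
            return True
        return False


def run_frames(cameras: List[ThrottledCamera], n_frames: int) -> None:
    # The headset (VR) camera would still render every frame;
    # only the extra screen cameras are throttled here.
    for frame in range(n_frames):
        for cam in cameras:
            cam.on_frame(frame)


# Usage: two screen cameras, each rendering every third frame, offset by one.
log: List[str] = []
cams = [
    ThrottledCamera("external_cam", every_n=3, phase=0, render=log.append),
    ThrottledCamera("docking_cam", every_n=3, phase=1, render=log.append),
]
run_frames(cams, 6)
# log == ["external_cam", "docking_cam", "external_cam", "docking_cam"]
```

Giving the two cameras different `phase` values means at most one extra camera renders on any frame, which smooths out frame-time spikes; this staggering is an assumption of the sketch, not something the article describes.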

The project is available for Meta Quest 2, Meta Quest 3, and Oculus Rift headsets. If you would like to try it, please contact me for the installation files.

This project is a collaboration, with IP rights held by Haissam Wakeb, an AI and advanced technologies professional.
